Hi All,

During PCM17, the Pentaho Community Meeting, I watched Uwe Geercken’s presentation about his Java Rule Engine (JaRE). The idea is to have business users maintain their own business rules, while the IT department only takes care of the IT logic. That should take load off IT’s shoulders and give power to the business users. Since the business users are the domain experts, data quality should improve when they maintain the rules directly.

I am a heavy Pentaho user, so I will focus on using it with Pentaho Data Integration (PDI/Spoon/Kitchen). The rule engine can also apply the rules to an Apache NiFi stream or an Apache Kafka stream, and it can run inside virtually any other Java application. JaRE is open source, available on GitHub, and can be used under the Apache License 2.0.

This blog post will cover the installation of the JaRE. In the coming days I will continue writing about maintaining the rules and about the PDI integration and usage.

JaRE Installation

I will install JaRE on a clean Ubuntu 16.04.3 LTS virtual machine. The OS is fully updated.

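First of all, we need to install the Tomcat server, the MariaDB server and Git. On Ubuntu 16.04 the Tomcat package is called tomcat8, which matches the /var/lib/tomcat8 path used further down.

sudo apt-get install tomcat8 mariadb-server git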

Next, we enter MariaDB

sudo mysql -uroot

and create the database and a maintaining user. Of course you should pick a more secure password. Just make sure to remember it. You’ll need it during the installation process.

CREATE DATABASE ruleengine_rules;
CREATE USER 'rule_maintainer'@'localhost' IDENTIFIED BY 'maintainer_password';
GRANT ALL PRIVILEGES ON ruleengine_rules.* TO 'rule_maintainer'@'localhost';
FLUSH PRIVILEGES;

Exit MariaDB by typing exit (or pressing Ctrl+C).

Now we download the ruleengine maintenance sql file to our filesystem and load it into the database we have just created

git clone https://github.com/uwegeercken/rule_maintenance_db.git
sudo mysql ruleengine_rules -uroot < rule_maintenance_db/ruleengine_rules.sql

The database is installed now.
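Before moving on, it can be worth checking that the maintenance user can actually log in and see the imported tables. The following is only a rough sketch: check_rules_db is a hypothetical helper name of my own, not part of JaRE. It uses the database and user names from above and lets the mysql client prompt for the password.

```shell
#!/bin/sh
# Hypothetical helper (not part of JaRE): check that a user can log in
# and sees at least one table in the given database.
# Usage: check_rules_db DATABASE USER
check_rules_db() {
    db="$1"
    user="$2"
    # -p prompts for the password, -N drops the column header,
    # -B gives plain batch output, -e runs a single statement.
    count=$(mysql -u "$user" -p -N -B -e \
        "SELECT COUNT(*) FROM information_schema.tables WHERE table_schema = '$db';") || return 1
    echo "tables visible to $user in $db: $count"
    # Succeed only if at least one table is visible.
    [ "$count" -gt 0 ]
}
```

Call it as check_rules_db ruleengine_rules rule_maintainer. If the login fails or no tables show up, the function returns a non-zero status, which usually points to a typo in the GRANT statement or an import that did not run.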

Next step is to download the ruleengine maintenance war file and copy it to the tomcat server

git clone https://github.com/uwegeercken/rule_maintenance_war.git
sudo cp rule_maintenance_war/rule_maintenance.war /var/lib/tomcat8/webapps

Now you can access the rule maintenance tool at

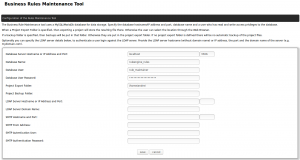

http://localhost:8080/rule_maintenance
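Tomcat normally picks up a WAR file placed in webapps on its own, but unpacking can take a few seconds, so the page may not respond right away. As a small sketch, assuming curl is installed, the hypothetical helper wait_for_app below (my own name, nothing that ships with JaRE or Tomcat) polls a URL until it answers with a 2xx or 3xx status.

```shell
#!/bin/sh
# Hypothetical helper: poll a URL until it answers with HTTP 2xx/3xx.
# Usage: wait_for_app URL [TRIES]   (TRIES defaults to 30, one per second)
wait_for_app() {
    url="$1"
    tries="${2:-30}"
    i=0
    while [ "$i" -lt "$tries" ]; do
        # -s: silent, -o /dev/null: discard the body,
        # -w '%{http_code}': print only the status code (000 if unreachable).
        code=$(curl -s -o /dev/null -w '%{http_code}' "$url")
        case "$code" in
            2??|3??)
                echo "up (HTTP $code)"
                return 0
                ;;
        esac
        i=$((i + 1))
        sleep 1
    done
    echo "no response from $url after $tries tries" >&2
    return 1
}
```

For this installation the call would be wait_for_app http://localhost:8080/rule_maintenance/. If it gives up, the Tomcat log is the first place to look.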

On first start, the engine asks for the MariaDB username/password provided earlier and a path where you want to save the rule files.

Click ‘Save’ to check the database connection. Next, click ‘Login’. The default username is “admin”, and the password is also “admin”.

This concludes the installation tutorial. The next blog entry will be about maintaining the rules.

Update 12/2017

The installation process has since been simplified. There is no longer any need to run the SQL file.